From the Item Bank

The Professional Testing Blog

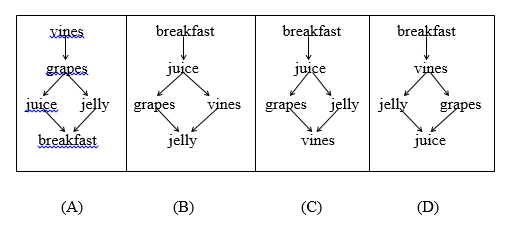

Translation vs. Test Adaptation: What’s the Difference?

April 14, 2016

While translation is necessary and is usually the main focus of a test adaptation/localization project, even a perfect translation may not be sufficient to yield an equivalent assessment instrument or comparable results. The reason is simple: translation ≠ adaptation.

One of my favorite examples of the difference between translation and adaptation comes from some pilot work that I helped with many years ago. In this example, the test question presented examinees with a list of functionally related words. Lists could range in length from 4 to 8 words. The examinees were instructed to think about the words and then to select, from four possible choices, the one diagram that best depicted the words in a logical, descending order.

In this case, the source language was English and the target language was Spanish. The English version of the item was piloted in the United States with English-speaking high school students. The item was then translated into Spanish and piloted in Mexico with Spanish-speaking high school students.

The word list for this particular question contained five words: grapes, jelly, juice, breakfast, and vines. The list was forward-translated from English into Spanish and then back-translated into English. A group of bilingual subject matter experts reviewed and approved the translation process and the final word choices.

The English version of the item looked like this (the key was option A):

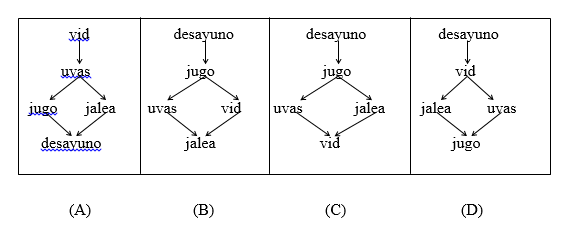

The translated version of the item looked like this:

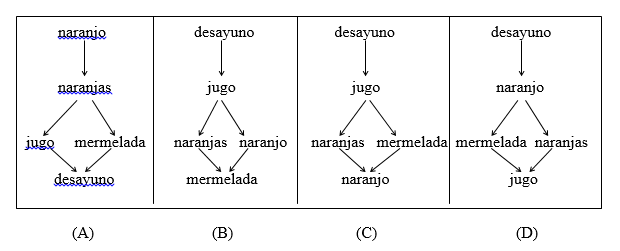

Again, the key was option A. However, when the data were analyzed, the classical difficulty index (p-value) of the English version was 0.80, while the p-value of the translated item was only 0.25.

The first reaction of the Content staff was to verify the integrity of the translation. Indeed, juice = jugo, breakfast = desayuno, grapes = uvas, vine = vid, jelly = jalea. So what went wrong? How could “identical” items perform so differently? Population equivalence wasn’t an issue, so what was affecting item difficulty?

To answer these questions, a committee of bilingual, bicultural subject matter experts was convened in Mexico. This item, along with a few others that had behaved differently than expected, was presented to the panel for review. In short order, the committee pointed out the problem: grape juice was not a typical breakfast beverage in our target area of Mexico. Orange juice was.

The item was then adapted to fit the target population, and the word list became juice, breakfast, oranges, orange trees, and marmalade. The adapted version of our item looked like this:

After a second round of piloting, the p-value of the adapted item was 0.80, the same as that of the source item in English. Translation was critically important in the adaptation process, but the translated item, despite being impeccably translated, was not a good cultural fit. Translation may be necessary, but it is not necessarily sufficient in test adaptation.
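For readers less familiar with the statistic used in this example, the classical difficulty index is simply the proportion of examinees who select the keyed option. The short Python sketch below shows that calculation. The function name `difficulty_index` and the response lists are hypothetical: the responses were invented so that the proportions match the reported values of 0.80 and 0.25, and they are not the actual pilot data.

```python
from typing import Sequence


def difficulty_index(responses: Sequence[str], key: str) -> float:
    """Classical difficulty index (p-value): proportion choosing the keyed option."""
    if not responses:
        raise ValueError("at least one response is required")
    correct = sum(1 for choice in responses if choice == key)
    return correct / len(responses)


# Hypothetical responses from 20 examinees per group (NOT the real pilot data).
english_responses = ["A"] * 16 + ["B"] * 2 + ["C"] + ["D"]
translated_responses = ["A"] * 5 + ["B"] * 5 + ["C"] * 5 + ["D"] * 5

p_english = difficulty_index(english_responses, key="A")
p_translated = difficulty_index(translated_responses, key="A")

print(f"English item p-value:    {p_english:.2f}")
print(f"Translated item p-value: {p_translated:.2f}")
print(f"Difference:              {p_english - p_translated:.2f}")
```

A gap of this size between two versions of the same item, with comparable populations, is the kind of signal that would prompt a content review like the one described above.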

This post is the second in a two-part series on test adaptation.
