From the Item Bank

The Professional Testing Blog

Inventory Planning in Testing: How Many Items Do We Need to Write?

December 20, 2015

It’s one of the most basic questions in planning and maintaining an examination program: How many items do we need to write, review, and pretest? Write too many, and you’re expending resources on inventory that will go stale. Write too few, and you can’t assemble the requisite number of test forms to your specifications.

Getting Started

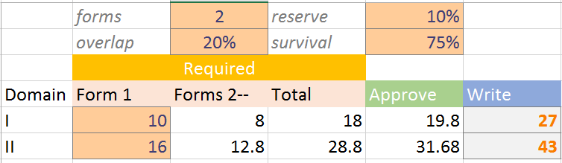

Let’s assume a domain requires 10 items per form, and that you require two forms with 20 percent overlap. The two forms share 2 items, so you will use 18 unique items overall (2 × 10 − 2). Say you want a 10 percent reserve for enemies. So you must approve 19.8 items, which rounds up to 20. Allowing for only 75 percent of written items to be approved (survive review), you will need 20 ∕ 0.75 ≈ 26.7, or 27, items written in that domain.

Figure 1. You may calculate the number of items your subject-matter experts should write using a simple table. The constraints are shown in the top two rows.

Ongoing Tests
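Before adding the extra attrition step that ongoing programs need, it may help to see the Getting Started arithmetic as code. What follows is a minimal Python sketch, not the author's own tool. The function name `items_to_write` and its parameters are hypothetical. Percentages are passed as whole numbers so that everything stays in integer arithmetic. The sketch assumes the same block of overlap items appears on every form and that fractional items are always rounded up.

```python
def ceil_div(a, b):
    """Integer division that rounds up (for non-negative a, positive b)."""
    return -(-a // b)


def items_to_write(items_per_form, forms, overlap_pct, reserve_pct, approval_pct):
    """Estimate how many items to write for one domain.

    Assumption (not stated in the article): the overlap items form a
    single common block that appears on every form.
    """
    # Items shared by all forms, e.g. 20% of 10 = 2.
    shared = items_per_form * overlap_pct // 100
    # Unique items actually used across all forms.
    used = shared + forms * (items_per_form - shared)
    # Add the reserve for enemy items, rounding up to whole items.
    approved = ceil_div(used * (100 + reserve_pct), 100)
    # Inflate for items that do not survive review.
    written = ceil_div(approved * 100, approval_pct)
    return {"used": used, "approved": approved, "written": written}


# The article's example: 10 items per form, 2 forms, 20% overlap,
# 10% enemy reserve, 75% approval rate.
plan = items_to_write(10, 2, 20, 10, 75)
print(plan)  # {'used': 18, 'approved': 20, 'written': 27}
```

Running the function once per domain and summing the `written` values would give a rough writing target for the whole examination.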

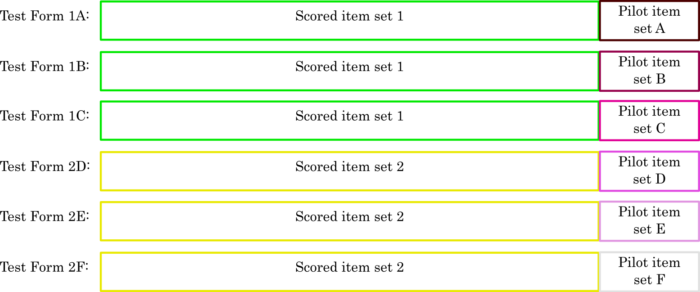

In inventory planning for ongoing testing, an additional level of attrition needs to be considered: what proportion of pretested items will be fit to use?

Figure 2. The set of items approved for operational use is a subset of items approved for pretest, which is in turn a subset of items written.

This is an area in which your psychometrician will probably thank you for allowing generous margins. As you pretest items, you may find that most do an adequate job measuring candidates’ knowledge. However, in generating equivalent forms, the test assembler (be it an individual or a computer) is looking for specific statistical attributes. To allow the best possible forms to be assembled, it is good to provide a generous buffer, pretesting perhaps twice as many items as are needed for operational use.

How many items is that? Let’s say you choose to retire items after two years of operational (scored) use. Building on the previous example, where you required 18 scored (operational) Domain I items to build two forms, you will be using 9 new scored items every year. If you choose to pretest twice the number of items you ultimately need (to cover enemies and also give leeway in form assembly), you will need to approve 18 items for pretest. For that, you will need to write 24 items per year (18 ∕ 0.75 approval rate). As you build up your reserves, you will want to adjust these numbers downward.

Note that to pretest large numbers of items, you normally couple each set of scored, operational items with different sets of unscored, pretest items.

Figure 3. You might pilot test 6 sets of items with only two base forms, but only if your candidate volume supports it.

Sensible Planning
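The yearly arithmetic for an ongoing program, as worked through in the Domain I example, can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than a definitive planning tool. The function name `annual_writing_plan` and its parameters are hypothetical, the pretest buffer is expressed as a whole-number multiplier, and the sketch does not model the downward adjustment the article recommends once reserves have built up.

```python
def ceil_div(a, b):
    """Integer division that rounds up (for non-negative a, positive b)."""
    return -(-a // b)


def annual_writing_plan(scored_items, years_in_use, pretest_multiplier, approval_pct):
    """Estimate yearly writing needs for one domain of an ongoing test.

    Assumption: items are retired evenly, so the same share of the
    scored pool is replaced every year.
    """
    # Scored items that must be replaced each year, e.g. 18 / 2 = 9.
    new_scored = ceil_div(scored_items, years_in_use)
    # Buffer for enemies and form assembly: pretest a multiple of the need.
    approve_for_pretest = new_scored * pretest_multiplier
    # Inflate for items that do not survive review.
    write = ceil_div(approve_for_pretest * 100, approval_pct)
    return {
        "new_scored": new_scored,
        "approve_for_pretest": approve_for_pretest,
        "write": write,
    }


# The article's example: 18 scored items, retired after 2 years,
# pretest twice what is needed, 75% approval rate.
yearly = annual_writing_plan(18, 2, 2, 75)
print(yearly)  # {'new_scored': 9, 'approve_for_pretest': 18, 'write': 24}
```

Lowering `pretest_multiplier` in later years is one simple way to experiment with smaller targets as the reserve grows.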

Categorized in: Industry News
